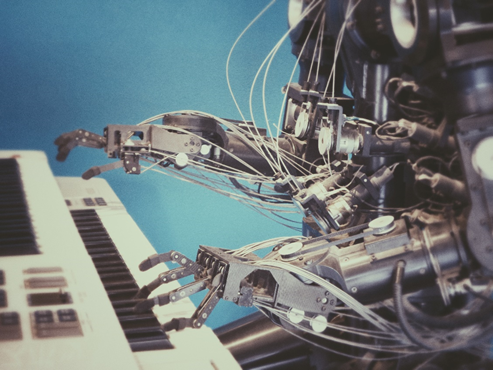

Automation is becoming increasingly prevalent across the workforce. Machines that accomplish tasks better, faster, and more efficiently than people, such as automated manufacturing equipment and self-driving cars, are putting people out of work. As automation and artificial intelligence become more widespread, powerful, and affordable, many employers are choosing them over hiring people. It has long been assumed that creative jobs and skill sets would be immune to this problem. However, recent examples challenge this assumption, which is a frightening prospect for individuals who rely on creative work for income.

Before jumping into these examples, a basic understanding of machine learning is helpful. Machine learning is a way for a computer to build functions (models) from data, improving them through experience rather than following rules written out step by step. Human input is still required: a person must define the goal the computer should achieve and the criterion it uses to measure progress toward that goal. The computer then works out, through trial and adjustment, how best to accomplish the task. The learning algorithms are initially designed by humans, but once training is underway they adjust their own parameters based on the results they observe. For example, a computer that plays chess could adjust its playing style when it discovers that certain moves are more likely to result in a loss. Increasingly capable algorithms are being developed all the time, and some can now create art and music.
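To make the chess example more concrete, here is a toy sketch in Python. It is not how real chess engines work; it is a simple multi-armed bandit (the epsilon-greedy strategy) in which the goal is winning, the criterion is each move's observed win rate, and the program gradually favors moves that win more often. The move names, their hidden win rates, and the function names are hypothetical, chosen only for illustration.

```python
import random

# Hypothetical moves and win rates, chosen only for illustration.
# The learner never reads this table; it only sees simulated game results.
TRUE_WIN_RATE = {"e4": 0.6, "d4": 0.5, "f3": 0.2}


def play_game(move: str, rng: random.Random) -> bool:
    """Stand-in for a real game: returns True (a win) with the move's hidden win rate."""
    return rng.random() < TRUE_WIN_RATE[move]


def learn_move_preferences(games: int = 5000, epsilon: float = 0.1,
                           seed: int = 0) -> dict[str, float]:
    """Epsilon-greedy bandit: estimate each move's win rate from experience."""
    rng = random.Random(seed)
    moves = list(TRUE_WIN_RATE)
    wins = {m: 0 for m in moves}
    plays = {m: 0 for m in moves}
    for _ in range(games):
        untried = [m for m in moves if plays[m] == 0]
        if untried:
            move = untried[0]            # try every move at least once
        elif rng.random() < epsilon:
            move = rng.choice(moves)     # explore: occasionally pick a move at random
        else:
            # exploit: pick the move with the best win rate observed so far
            move = max(moves, key=lambda m: wins[m] / plays[m])
        plays[move] += 1
        wins[move] += play_game(move, rng)  # learn from the outcome of this game
    return {m: wins[m] / plays[m] for m in moves}
```

Calling `learn_move_preferences()` returns an estimated win rate per move; the move that loses most often ends up with the lowest estimate, so the greedy choice steers away from it. The small `epsilon` keeps the program occasionally testing other moves, so an unlucky early streak does not lock in a bad habit.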

The First Music Generated by an AI

In 1957, Lejaren Hiller and Leonard Isaacson of the University of Illinois created the first algorithm to generate a musical composition. Their program composed and notated music that was later performed by a string quartet as the Illiac Suite, the first piece of music composed by a computer.

Jukebox

OpenAI was founded by Elon Musk, Sam Altman, Ilya Sutskever, Greg Brockman, Wojciech Zaremba, and John Schulman. The organization specializes in machine learning and artificial intelligence research. One of its projects is Jukebox.

Jukebox is a prime example of how computers can learn to create music from human-made recordings. It is an artificial intelligence that analyzes the audio fed into it and then generates new music based on what it has learned from the timbre, structure, and patterns of that audio. Jukebox was trained on a dataset of over a million songs, each labeled with information such as genre and artist. When asked for music in a particular category, it generates new audio that fits that category, following patterns it has learned from the data rather than rules written by hand.
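Jukebox itself is a large neural network that works directly on raw audio, far beyond what a short example can show. The underlying idea, learning patterns from example music and then generating new material that fits a chosen category, can still be illustrated with a much simpler and different technique: a first-order Markov chain over note names. The genre labels, melodies, and function names below are hypothetical.

```python
import random
from collections import defaultdict

# Hypothetical training data: short melodies (note names) grouped by made-up genre labels.
TRAINING_DATA = {
    "stepwise": [
        ["C", "D", "E", "C"],
        ["E", "F", "G", "E"],
        ["C", "D", "E", "F", "G", "E", "C"],
    ],
    "arpeggio": [
        ["C", "E", "G", "C", "E", "G"],
    ],
}


def train_markov(melodies: list[list[str]]) -> dict[str, list[str]]:
    """Record every observed note-to-next-note transition (a first-order Markov chain)."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return dict(transitions)


# One model per category, mirroring the idea of generating music that fits a chosen style.
MODELS = {genre: train_markov(melodies) for genre, melodies in TRAINING_DATA.items()}


def compose(genre: str, start: str, length: int, seed: int = 0) -> list[str]:
    """Generate a new melody by walking the learned transitions for one genre."""
    rng = random.Random(seed)
    transitions = MODELS[genre]
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # no observed successor for this note: stop early
            break
        # Duplicates in the list make common transitions proportionally more likely.
        melody.append(rng.choice(options))
    return melody
```

For instance, `compose("arpeggio", "C", 7)` returns `["C", "E", "G", "C", "E", "G", "C"]`, because in that tiny training set each note has only one observed successor. With the richer "stepwise" data, different seeds produce different melodies, yet every step still follows a transition that appeared in the examples.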

Aiva

Aiva is another artificial intelligence that produces music. Its compositions are sophisticated and can be difficult to distinguish from those of a human composer. Aiva's creators have built a market for it by licensing its music to content creators for projects such as commercials, media soundtracks, video games, and more.

NSynth

This one is interesting. Instead of creating whole pieces of music, NSynth focuses primarily on sound design. Traditional synthesizers generate sound with components such as oscillators and rely on a person to shape it, so the result depends entirely on the creativity of the human at the controls. With NSynth, however, existing sounds can be fed into a neural network, a model that learns patterns from data. NSynth then uses what it has learned from those sounds to generate an original sound that music producers can use as an instrument.
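To make the contrast concrete, here is a minimal sketch of the traditional approach: a basic oscillator in which a person chooses every parameter (waveform, frequency, duration, loudness). NSynth replaces these hand-picked settings with what a neural network learns from existing sounds; that part is not shown here. The function name and default values are illustrative assumptions.

```python
import math


def oscillator(waveform: str, frequency_hz: float, duration_s: float,
               sample_rate: int = 44_100, amplitude: float = 0.5) -> list[float]:
    """Generate raw audio samples for a basic oscillator; every parameter is chosen by a person."""
    n_samples = int(duration_s * sample_rate)
    samples = []
    for n in range(n_samples):
        # Position within the current cycle of the wave, in the range [0, 1).
        phase = (frequency_hz * n / sample_rate) % 1.0
        if waveform == "sine":
            value = math.sin(2 * math.pi * phase)
        elif waveform == "square":
            value = 1.0 if phase < 0.5 else -1.0
        elif waveform == "saw":
            value = 2.0 * phase - 1.0
        else:
            raise ValueError(f"unknown waveform: {waveform}")
        samples.append(amplitude * value)
    return samples
```

For example, `oscillator("sine", 440.0, 0.5)` yields 22,050 samples of a concert-A sine tone. Every change in timbre has to come from a person picking a different waveform or adding further processing, which is exactly the manual work NSynth aims to reduce.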

This offers music producers enormous potential. It removes much of the hassle of complex sound design and opens new doors for experimentation with a practically unlimited range of sounds that can be generated with little effort.

What Does This Mean for the Future of Music?

The idea that human music production might become obsolete is a frightening thought, especially for those who rely on it to make a living. Given the progress of technology and artificial intelligence in recent years, it is not hard to imagine that many musical services will soon be automated by machine intelligence: for example, a streaming platform that generates original content around the clock, or a digital composer that produces the most emotionally resonant soundtrack for a movie in a matter of seconds.

Removing the human element from music could make it feel less special to listeners and slightly reduce the depth of the industry (its cultural attachment, overall enjoyment, and scarcity). Supply would outstrip demand; demand would always be met, and a new equilibrium would emerge as businesses produced music at whatever volume maximized profit. That would be unfortunate, because better-than-average music would be undervalued and underappreciated.

On the plus side, artificial intelligence taking over music production could create things that no one has ever heard or even imagined. If a program were ever perfected that creates optimal music as efficiently as possible, many people would surely be eager to hear what it has to offer. In theory, a sufficiently intelligent computer could be given the task of creating ‘the perfect song’ and, given a precise criterion for what makes a song perfect, produce a song that is mathematically optimal by that measure.

A music industry run by artificially intelligent music producers would have both pros and cons, but one thing is certain: although it is inevitable that technology will reach this point, there is still a long way to go. The average music maker or listener, while looking forward to the benefits of artificially intelligent music, should make the most of a world with organic music production and not take it for granted while they still can.

